Capacitor Plugin for Text Detection Part 4: Using the Plugin

This is part 4/6 of the "Capacitor Plugin for Text Detection" series.

In the previous post, we cleaned up the web implementation of the plugin and added a shim between our plugin and client code. In this post, let's look at actually using the plugin through that shim.

Using the Plugin

Navigate to the sample app, ImageReader, and open `ImageReader/src/app/home/home.page.ts`.

We can import our plugin like this:

```typescript
import { TextDetector, TextDetection, ImageOrientation } from 'cap-ml';
```

Without the shim we wrote in the previous post, we'd have to import the plugin like this instead:

```typescript
import { Plugins } from '@capacitor/core';

// Pull the native plugin off Capacitor's Plugins registry by name
const { CapML } = Plugins;
```
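For context, here is a minimal sketch of the kind of wrapping a shim does, so client code never touches `Plugins` directly. This is not the cap-ml source: `NativeTextPlugin`, `TextDetectorSketch`, the `{ filename, orientation }` option names and the `{ textDetections }` result shape are all hypothetical, and a fake plugin object stands in for `Plugins.CapML` so the snippet runs on its own.

```typescript
// Hypothetical detection shape; the real one is described in the iOS plugin post.
interface Detection {
  text: string;
}

// Hypothetical interface for the raw native plugin (what Plugins.CapML might expose).
interface NativeTextPlugin {
  detectText(options: { filename: string; orientation?: string }): Promise<{ textDetections: Detection[] }>;
}

// Thin wrapper: callers pass a file path and get a plain array back,
// without building option objects or unpacking results themselves.
class TextDetectorSketch {
  private readonly plugin: NativeTextPlugin;

  constructor(plugin: NativeTextPlugin) {
    this.plugin = plugin;
  }

  async detectText(filename: string, orientation?: string): Promise<Detection[]> {
    const result = await this.plugin.detectText({ filename, orientation });
    return result.textDetections;
  }
}

// Fake native plugin so the sketch runs outside a Capacitor app.
const fakePlugin: NativeTextPlugin = {
  async detectText({ filename }) {
    return { textDetections: [{ text: `text from ${filename}` }] };
  },
};

new TextDetectorSketch(fakePlugin)
  .detectText('photo.jpg')
  .then(detections => console.log(detections));
```

The design point is simply that the shim owns the plugin lookup and the request/response shapes, so `home.page.ts` only sees `new TextDetector()` and `detectText(path)`.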

To select a photo from the device's gallery, we'll use another Capacitor plugin, Camera, and pass the resulting file path of the image to our plugin.

Import the Camera plugin:

```typescript
import { Plugins, CameraSource, CameraResultType, CameraPhoto } from '@capacitor/core';

const { Camera } = Plugins;
```

and use it in our code like this:

```typescript
// Let the user pick an image from Photos and get back a file URI
Camera.getPhoto({
  resultType: CameraResultType.Uri,
  source: CameraSource.Photos,
}).then(async imageFile => {
  this.imageFile = imageFile;

  // Hand the native file path to our text detection plugin
  const td = new TextDetector();
  const textDetections = await td.detectText(imageFile.path!);
}).catch(error => console.error(error));
```
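The `!` in `imageFile.path!` tells TypeScript to trust that `path` is set, but on `CameraPhoto` it is an optional field. If you'd rather fail with a clear message than pass `undefined` to the detector, a small guard works; `PhotoLike` and `requirePath` are hypothetical names for this sketch, with `PhotoLike` keeping only the one field we read.

```typescript
// Hypothetical stand-in for CameraPhoto: only the optional field we need.
interface PhotoLike {
  path?: string;
}

// Returns the native file path, or throws a descriptive error instead of
// letting undefined reach the text detector.
function requirePath(photo: PhotoLike): string {
  if (!photo.path) {
    throw new Error('Selected photo has no native file path');
  }
  return photo.path;
}

// Inside the .then callback this would read:
//   const textDetections = await td.detectText(requirePath(imageFile));
console.log(requirePath({ path: 'file:///tmp/photo.jpg' }));
```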

`textDetections` will be the array of text detections we saw in the iOS plugin post.
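If all you need is the recognized strings, you can map over that array. This sketch assumes each detection has a `text` property; `DetectionLike` and `detectedText` are hypothetical local names, not exports of cap-ml.

```typescript
// Hypothetical local type; the real TextDetection also carries corner coordinates.
interface DetectionLike {
  text: string;
}

// Collects the non-empty recognized strings, in the order the plugin returned them.
function detectedText(detections: DetectionLike[]): string[] {
  return detections
    .map(d => d.text.trim())
    .filter(text => text.length > 0);
}

const sample: DetectionLike[] = [{ text: 'Hello ' }, { text: '' }, { text: 'World' }];
console.log(detectedText(sample).join('\n'));
```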

Here's the complete implementation of home.page.ts.

```typescript
import { Component, OnInit } from '@angular/core';
import { LoadingController } from '@ionic/angular';
import { Plugins, CameraSource, CameraResultType, CameraPhoto } from '@capacitor/core';
import { TextDetector, TextDetection, ImageOrientation } from 'cap-ml';

const { Camera } = Plugins;

@Component({
  selector: 'app-home',
  templateUrl: 'home.page.html',
  styleUrls: ['home.page.scss'],
})
export class HomePage implements OnInit {
  private imageFile: CameraPhoto;
  private textDetections: TextDetection[];

  // LoadingController is injected so we can show a spinner while the image is processed
  constructor(private loadingController: LoadingController) {}

  ngOnInit() {
    this.detectTextInImage();
  }

  async detectTextInImage() {
    const loader = await this.loadingController.create({
      message: 'Processing Image...',
    });

    // Using the Capacitor Camera plugin to choose a picture from the device's Photos
    Camera.getPhoto({
      resultType: CameraResultType.Uri,
      source: CameraSource.Photos,
    }).then(async imageFile => {
      this.imageFile = imageFile;
      await loader.present();

      try {
        const td = new TextDetector();
        this.textDetections = await td.detectText(imageFile.path!);
        console.log(this.textDetections);
      } finally {
        // Hide the spinner whether or not detection succeeded
        await loader.dismiss();
      }
    }).catch(error => console.error(error));
  }
}
```
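Once the loader created in `detectTextInImage` is presented, it has to be dismissed even if detection throws, or the spinner stays on screen. A small helper can centralize that pattern. `withLoader` and `LoaderLike` are hypothetical names; `LoaderLike` covers only the `present()` and `dismiss()` methods of Ionic's loading element, and a fake loader replaces `loadingController.create(...)` so the sketch runs standalone.

```typescript
// Hypothetical subset of Ionic's loading element used by this helper.
interface LoaderLike {
  present(): Promise<void>;
  dismiss(): Promise<boolean>;
}

// Shows the loader, runs the task, and always dismisses the loader afterwards,
// whether the task resolves or rejects. The task's result or error is passed through.
async function withLoader<T>(loader: LoaderLike, task: () => Promise<T>): Promise<T> {
  await loader.present();
  try {
    return await task();
  } finally {
    await loader.dismiss();
  }
}

// Fake loader that records its calls.
const calls: string[] = [];
const fakeLoader: LoaderLike = {
  async present() { calls.push('present'); },
  async dismiss() { calls.push('dismiss'); return true; },
};

withLoader(fakeLoader, async () => { throw new Error('detection failed'); })
  .catch(error => console.error(error.message))
  .then(() => console.log(calls)); // calls now holds 'present' then 'dismiss'
```

In the page, the `.then` callback could then shrink to `this.textDetections = await withLoader(loader, () => td.detectText(imageFile.path!))`.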

For now I just log the text detections to the console, but towards the end of the series we'll see how to use the results to highlight the sections of the image that contain text.
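As a rough preview of that highlighting step, here is a sketch that turns one detection's four corner points into a pixel rectangle you could draw over the image. It assumes the corners are `[x, y]` pairs normalized to the 0 to 1 range with the origin at the bottom-left, which is the convention Apple's Vision framework uses; the `Corners` field names and `toPixelRect` are hypothetical and should be checked against the real `TextDetection` output.

```typescript
type Point = [number, number];

// Hypothetical corner layout; verify against the real TextDetection fields.
interface Corners {
  topLeft: Point;
  topRight: Point;
  bottomLeft: Point;
  bottomRight: Point;
}

interface PixelRect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Converts normalized corners (origin bottom-left, y pointing up) into an
// axis-aligned pixel rectangle with the origin at the top-left, as canvas and CSS expect.
function toPixelRect(c: Corners, imageWidth: number, imageHeight: number): PixelRect {
  const points = [c.topLeft, c.topRight, c.bottomLeft, c.bottomRight];
  const xs = points.map(([x]) => x * imageWidth);
  // Flip y so that 0 is the top edge of the image.
  const ys = points.map(([, y]) => (1 - y) * imageHeight);
  const minX = Math.min(...xs);
  const minY = Math.min(...ys);
  return {
    x: minX,
    y: minY,
    width: Math.max(...xs) - minX,
    height: Math.max(...ys) - minY,
  };
}

const box: Corners = {
  topLeft: [0.25, 0.75],
  topRight: [0.75, 0.75],
  bottomLeft: [0.25, 0.5],
  bottomRight: [0.75, 0.5],
};
console.log(toPixelRect(box, 1000, 2000)); // { x: 250, y: 500, width: 500, height: 500 }
```

Taking the min and max over all four corners gives a box that still covers slightly rotated text, at the cost of some extra padding.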

To test it out:

From the ImageReader directory, run `npm run build && npx cap sync` to copy the JavaScript changes over to the iOS platform.

Once the sync finishes, open ImageReader in Xcode using `npx cap open ios`.

Now, since we're fetching an image from the device, we need to make sure the app has the corresponding permissions.

Open the app's (ImageReader's) Info.plist (not the Info.plist in the plugin's cap-ml directory) and add the following permissions:

- Privacy - Camera Usage Description

- Privacy - Photo Library Additions Usage Description

- Privacy - Photo Library Usage Description

Run the app from Xcode, in a simulator or on a device; either one needs iOS 13.0 or higher, because CoreML's text detection is only available from iOS 13.0 onward.

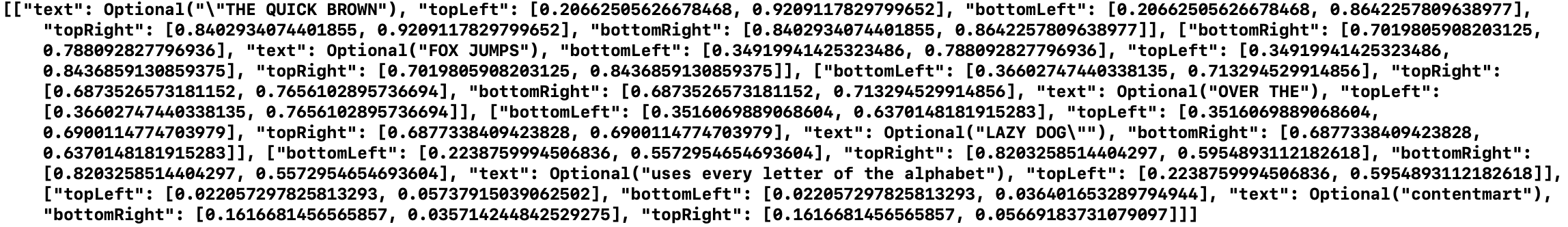

Pick an image with text in upright position. If you open up the console in XCode, you should now see an array of text blocks with bounding coordinates like this

Debugging on device or simulator

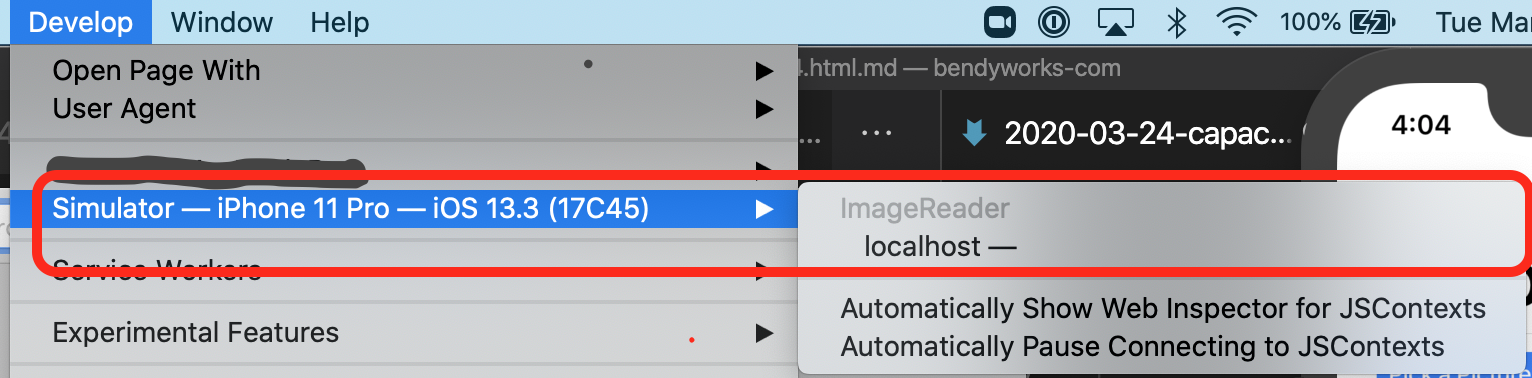

If you need to debug anything on the device or simulator, you can do so using Safari:

- Run the app on a simulator or device.
- Open Safari.
- In Safari's menu bar, go to Develop > Simulator (or your device's name) and click localhost.

If you don’t see the Develop menu in the menu bar, choose Safari > Preferences, click Advanced, then select "Show Develop menu in menu bar."

In the next post, we'll implement the Android plugin.